Linux RAID

Here is what we are going to cover:

- Setup RAID 10 Array

- Replace a Drive

- Setting Up RAID 1 On The Root Disk

- Disk Health with Smartmon Tools

Use ZFS Instead: In the past I used Linux software RAID with ext4 for my personal NAS. I have since converted it to ZFS. I would recommend using ZFS if you care about your data. Take a look at these two related pages:

Assumptions:

- You are running Ubuntu 16.04 or 18.04

- Most commands on this page are run as root. You may want to use sudo instead.

Ubuntu Linux RAID 10 Array Setup

The Linux software RAID subsystem allows for the creation of really excellent RAID 10 arrays. These instructions assume you are running Ubuntu 16.04. On another distro or version, package names and paths might be different, but mdadm should function mostly the same.

To start, we want to make sure we install mdadm. We run apt-get update to get a fresh view of what is on the repo. We then install the mdadm package.

apt-get update

apt-get install mdadm

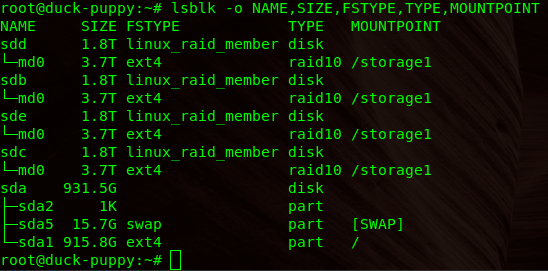

The following command shows relevant information about the block devices on the system.

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

This is what it looks like on my NAS after setting everything up.

Create the actual array. Specify all four devices. Other numbers of drives are valid but we will use four for this example.

mdadm --create --verbose /dev/md0 --level=10 \
--raid-devices=4 /dev/sdb /dev/sdc /dev/sdd /dev/sde

As an alternative, it is also possible to create a four-drive array with only two disks and add the other two later. Use the keyword ‘missing’ in place of the second and fourth disks, so that each mirrored pair (with the default layout) still has one disk present.

mdadm --create --verbose /dev/md0 --level=10 \
--raid-devices=4 /dev/sdb missing /dev/sdc missing

Check the details of the array.

mdadm --detail /dev/md0

cat /proc/mdstat
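If you want to use the array state in a script, the state line of the mdadm --detail output can be pulled out with a small filter. This is only a minimal sketch: array_state is a hypothetical helper name, and it assumes the output contains the usual "State : ..." line.

```shell
# Hypothetical helper: print the array state from `mdadm --detail` output on stdin.
# Assumes a line of the form "State : clean, degraded" is present.
array_state() {
    # Strip trailing whitespace, then print whatever follows "State : "
    sed -n -e 's/[[:space:]]*$//' -e 's/^[[:space:]]*State : //p'
}

# Example (needs root and a real array):
#   mdadm --detail /dev/md0 | array_state
```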

Save everything to the config file. The path to this file might be slightly different on other distros.

mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

cat /etc/mdadm/mdadm.conf
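Because tee -a appends, running the save command twice leaves duplicate ARRAY lines in the config file. The sketch below is one way to avoid that. append_new_arrays is a hypothetical helper, not part of mdadm, and it assumes each ARRAY line carries a UUID= field.

```shell
# Hypothetical helper: read `mdadm --detail --scan` output on stdin and append
# only the ARRAY lines whose UUID is not already in the config file.
append_new_arrays() {
    conf=${1:-/etc/mdadm/mdadm.conf}
    while IFS= read -r line; do
        # Skip lines that carry no UUID= field
        uuid=$(printf '%s\n' "$line" | grep -o 'UUID=[0-9a-fA-F:]*') || continue
        # Append only when this UUID is not in the file yet
        grep -qF "$uuid" "$conf" 2>/dev/null || printf '%s\n' "$line" >> "$conf"
    done
}

# Example (needs root):
#   mdadm --detail --scan | append_new_arrays
```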

Create the filesystem on the array and mount it.

mkfs.ext4 -F /dev/md0

mkdir /storage1

mount /dev/md0 /storage1

df -h

Add the mount to the fstab file.

echo '/dev/md0 /storage1 ext4 defaults,nofail,discard 0 0' >> /etc/fstab

Unmount the filesystem for now.

umount /storage1

df -h

Mount it with a single argument (relying on the fstab entry).

mount /storage1

df -h

You might want to change the ownership if the file system will be used by a user other than root. If you copied data onto the file system from somewhere else, you might want to do this recursively.

cd /storage1/

ls -la

chown -R user1:user1 .

ls -la

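If you left two disks out, you can add them when ready. Assuming the array was created with /dev/sdb and /dev/sdc as in the example above, add the two missing disks (/dev/sdd and /dev/sde here) with the following commands.

mdadm /dev/md0 --add /dev/sdd

mdadm /dev/md0 --add /dev/sde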

Watch them get synced.

mdadm --detail /dev/md0

cat /proc/mdstat
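If you would rather see just the progress figure than the full status, the percentage can be extracted from the mdstat text. This is a rough sketch that assumes the usual "recovery = 8.5%" style progress line. sync_progress is a hypothetical helper name.

```shell
# Hypothetical helper: print "<array> <percent>" for every array that is
# currently resyncing, recovering, reshaping or being checked.
sync_progress() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                 # remember the current array
        /(resync|recovery|reshape|check) *=/ {
            for (i = 1; i <= NF; i++)
                if ($i ~ /^[0-9.]+%$/) print name, $i
        }
    ' "${1:-/proc/mdstat}"
}

# Example: refresh every 5 seconds
#   while sleep 5; do sync_progress; done
```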

You can use mdadm for monitoring. The command can be daemonized and can send email alerts. This isn’t a complete monitoring solution on its own, though: if the daemon were to die, you would silently lose your monitoring.

mdadm --monitor /dev/md0
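One way to avoid depending on a single daemon is a stateless check that cron or an external monitoring system runs on a schedule. The sketch below reads the mdstat text and reports any array whose member status string (for example [U_U_]) contains an underscore, which marks a missing or failed member. Both function names are hypothetical.

```shell
# Hypothetical helper: list md arrays with a missing or failed member.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        match($0, /\[[U_]+\]/) {
            if (index(substr($0, RSTART, RLENGTH), "_")) print name
        }
    ' "${1:-/proc/mdstat}"
}

# Print OK and return 0 when all arrays are healthy, otherwise return 1.
check_md_health() {
    bad=$(degraded_arrays "$@")
    if [ -n "$bad" ]; then
        echo "DEGRADED: $bad" >&2
        return 1
    fi
    echo "OK"
}
```

A non-zero exit status is something most monitoring systems and cron wrappers can alert on.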

Linux RAID - Replace a Drive

Assume the drive being replaced, /dev/sdb, has two partitions: /dev/sdb1 in /dev/md0 and /dev/sdb2 in /dev/md1.

Check serial number:

smartctl -a /dev/sdb|grep -i serial

Mark the drive as failed:

mdadm --manage /dev/md0 --fail /dev/sdb1

Check the status:

cat /proc/mdstat

Remove from the array:

mdadm --manage /dev/md0 --remove /dev/sdb1

Do the same for the other partition:

mdadm --manage /dev/md1 --fail /dev/sdb2

mdadm --manage /dev/md1 --remove /dev/sdb2

Shut the system down:

shutdown -h now

- Physically swap the disk.

- Boot the system.

Partition it the same as an existing disk in the array:

sfdisk -d /dev/sda | sfdisk /dev/sdb
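Swapping the source and target in that command would overwrite the partition table of the good disk, so it can be worth wrapping it in a few sanity checks. The following is only a sketch. copy_partition_table is a hypothetical helper, and the backup location under /root is an assumption.

```shell
# Hypothetical helper: copy the partition table from SRC to DST with sfdisk,
# saving a dump of DST's old table first. Refuses obviously unsafe arguments.
copy_partition_table() {
    src=$1
    dst=$2
    if [ -z "$src" ] || [ -z "$dst" ] || [ "$src" = "$dst" ]; then
        echo "usage: copy_partition_table SRC DST (must differ)" >&2
        return 2
    fi
    # Refuse if DST or one of its partitions is currently mounted
    if grep -q "^$dst" /proc/mounts; then
        echo "$dst appears to be mounted, refusing" >&2
        return 1
    fi
    # Assumed backup location; a brand new disk may have no table to dump
    backup="/root/$(basename "$dst")-table.$(date +%Y%m%d%H%M%S).dump"
    sfdisk -d "$dst" > "$backup" 2>/dev/null || true
    sfdisk -d "$src" | sfdisk "$dst"
}

# Example (needs root):
#   copy_partition_table /dev/sda /dev/sdb
```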

Verify partition table:

fdisk -l

Add the new disk to the array:

mdadm --manage /dev/md0 --add /dev/sdb1

mdadm --manage /dev/md1 --add /dev/sdb2

Watch it syncing and wait until it is complete:

cat /proc/mdstat

mdadm --detail /dev/md0

Linux - RAID 1 On The Root Disk - Setup

Install the tools you need:

apt-get install mdadm rsync initramfs-tools

- Partition the second disk with fdisk / cfdisk

- Use the same partition sizes (in sectors) as on the first disk

- Set the partition types to fd ( linux raid )

Create degraded arrays that contain only the second disk’s partitions for now:

mdadm --create /dev/md0 --level=1 --raid-devices=2 missing /dev/sdb1

mdadm --create /dev/md1 --level=1 --raid-devices=2 missing /dev/sdb2

Create swap and a filesystem on the new arrays:

mkswap /dev/md1

mkfs.ext4 /dev/md0

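Add a DEVICE line to the end of the config file so that mdadm knows which devices to scan for RAID members, then append the array definitions: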

echo "DEVICE /dev/sda* /dev/sdb*" >> /etc/mdadm/mdadm.conf

mdadm --detail --scan >> /etc/mdadm/mdadm.conf

Run this:

dpkg-reconfigure mdadm

Check status:

watch -n1 cat /proc/mdstat # watch disk sync status if syncing

Reconfigure GRUB with the command below. It will do the following:

- let you select both /dev/sda and /dev/sdb (but not /dev/md0) as installation targets

- install the bootloader on both drives

- rebuild the init ramdisk and bootloader with RAID support

dpkg-reconfigure grub-pc

Copy from old drive to new one:

mkdir /tmp/mntroot

mount /dev/md0 /tmp/mntroot

rsync -auHxv --exclude='/proc/*' --exclude='/sys/*' --exclude='/tmp/*' /* /tmp/mntroot/

Edit the fstab file on the new array and replace the sda (or UUID) entries with the RAID devices:

vi /tmp/mntroot/etc/fstab

##/dev/sda1

##/dev/sda2

/dev/md0

/dev/md1

Boot from second disk:

- At the GRUB menu, hit “e”

- Edit your boot options. Just put (md/0) and /dev/md0 in the right places.

set root='(md/0)'

linux /boot/vmlinuz-2.6.32-5-amd64 root=/dev/md0 ro quiet

Once the system is booted up, run either of these commands to verify that your system is using the RAID device as expected:

mount

df -h

Look for something like this:

/dev/md0 on / type ext4 (rw,noatime,errors=remount-ro)
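The same check can be scripted by reading the mounts table instead of scanning the output by eye. This is a small sketch: root_source is a hypothetical helper, and it takes the last entry for / because some systems also list an early rootfs entry.

```shell
# Hypothetical helper: print the device currently mounted on /.
root_source() {
    awk '$2 == "/" { dev = $1 } END { print dev }' "${1:-/proc/mounts}"
}

# Example:
#   [ "$(root_source)" = "/dev/md0" ] && echo "root is on the RAID device"
```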

- Partition first disk with fdisk / cfdisk

- Use the same size partitions as second in sectors

- Make sure the partition types are set to fd ( linux raid )

Add original disk to the array:

mdadm /dev/md0 -a /dev/sda1

mdadm /dev/md1 -a /dev/sda2

Check status and watch it sync:

watch -n1 cat /proc/mdstat

Update grub so that you will use the RAID device when you boot from either disk.

update-grub # update grub - probably not needed

dpkg-reconfigure grub-pc # because grub update didn't do both disks

Shut down your system:

shutdown -h now

- Remove second drive and test booting

You should see the second drive displayed as disabled:

cat /proc/mdstat # should show second drive as disabled

- Add the drive back and start up

Once the system is up you can add the devices back to the array if they are missing:

mdadm /dev/md0 -a /dev/sdb1 # add it back

mdadm /dev/md1 -a /dev/sdb2 # add it back

- Do the same for the other disk to make sure you can boot from both.

Enable periodic RAID checks to keep the disks in sync. These are built into the package.

dpkg-reconfigure mdadm

You could also create a cron job that does the following:

echo "check" > /sys/block/md0/md/sync_action
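If you go the cron route, a script along these lines could start a check on every array rather than just md0. Treat it as a sketch. start_md_checks is a hypothetical helper, and the sysfs root is a parameter only so that the function can be tried against a test directory.

```shell
# Hypothetical helper: start a "check" on every md array that is currently idle.
start_md_checks() {
    sysfs=${1:-/sys}
    for action in "$sysfs"/block/md*/md/sync_action; do
        [ -e "$action" ] || continue        # no md arrays present
        # Leave arrays alone if they are already resyncing or recovering
        if [ "$(cat "$action")" = "idle" ]; then
            echo check > "$action"
        fi
    done
}
```

After a check finishes, /sys/block/md0/md/mismatch_cnt reports how many mismatched sectors were found.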


Disk Health with Smartmon Tools

Update repo info and install Smartmon tools.

apt-get update

apt-get install smartmontools

Get a list of disks. Either way works.

fdisk -l|grep -i "Disk "|grep -v ram|grep -v identifier

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

Check drive status.

smartctl -a /dev/sdb

Grep for errors.

smartctl -a /dev/sdb|grep -i error
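Note that grepping for "error" also matches attribute names such as Raw_Read_Error_Rate on a perfectly healthy drive. For a quick pass or fail answer, the overall health line printed by smartctl -H is easier to script against. A minimal sketch follows, assuming an ATA drive, which reports the "SMART overall-health self-assessment test result" line. smart_health is a hypothetical helper name.

```shell
# Hypothetical helper: print the overall health verdict (e.g. PASSED)
# from `smartctl -H` output on stdin. Assumes the ATA-style result line.
smart_health() {
    sed -n 's/^SMART overall-health self-assessment test result: //p'
}

# Example (needs root):
#   smartctl -H /dev/sdb | smart_health
```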

Run a short test. This will run in the background and will take a few minutes. Once complete, the result will show in the ‘smartctl -a’ output.

smartctl -t short /dev/sdb

Wait a few minutes and check the drive again.

smartctl -a /dev/sdb

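Configure smartd to run scheduled SMART self-tests. With the following lines in /etc/smartd.conf, a short self-test runs every day at 02:00 and a long self-test runs every Saturday at 03:00: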

/dev/sda -a -d ata -o on -S on -s (S/../.././02|L/../../6/03)

/dev/sdb -a -d ata -o on -S on -s (S/../.././02|L/../../6/03)

Linux Raid Partition Types

For MBR partition tables either of these partition types will work, but I would go with FD so that the disks can be detected as RAID. There are arguments for using DA instead of FD (see the link to kernel.org in the references section).

- 0xDA for non-fs data

- 0xFD for raid autodetect arrays

For GPT you would use this:

- 0xFD00 on GPT

Good Resources